Hello everyone, my name is Siheng Xiong. I am currently a final-year Ph.D. student in Machine Learning at the Georgia Institute of Technology, advised by Prof. Faramarz Fekri. Prior to this, I earned my Bachelor’s degree from Xi’an Jiaotong University and my Master’s degree from Shanghai Jiao Tong University.

My research focuses on post-training large language models for deliberate reasoning and planning, emphasizing model-based reasoning, long-context modelling, and process-level supervision.

🚀 Featured Projects

Language Agents

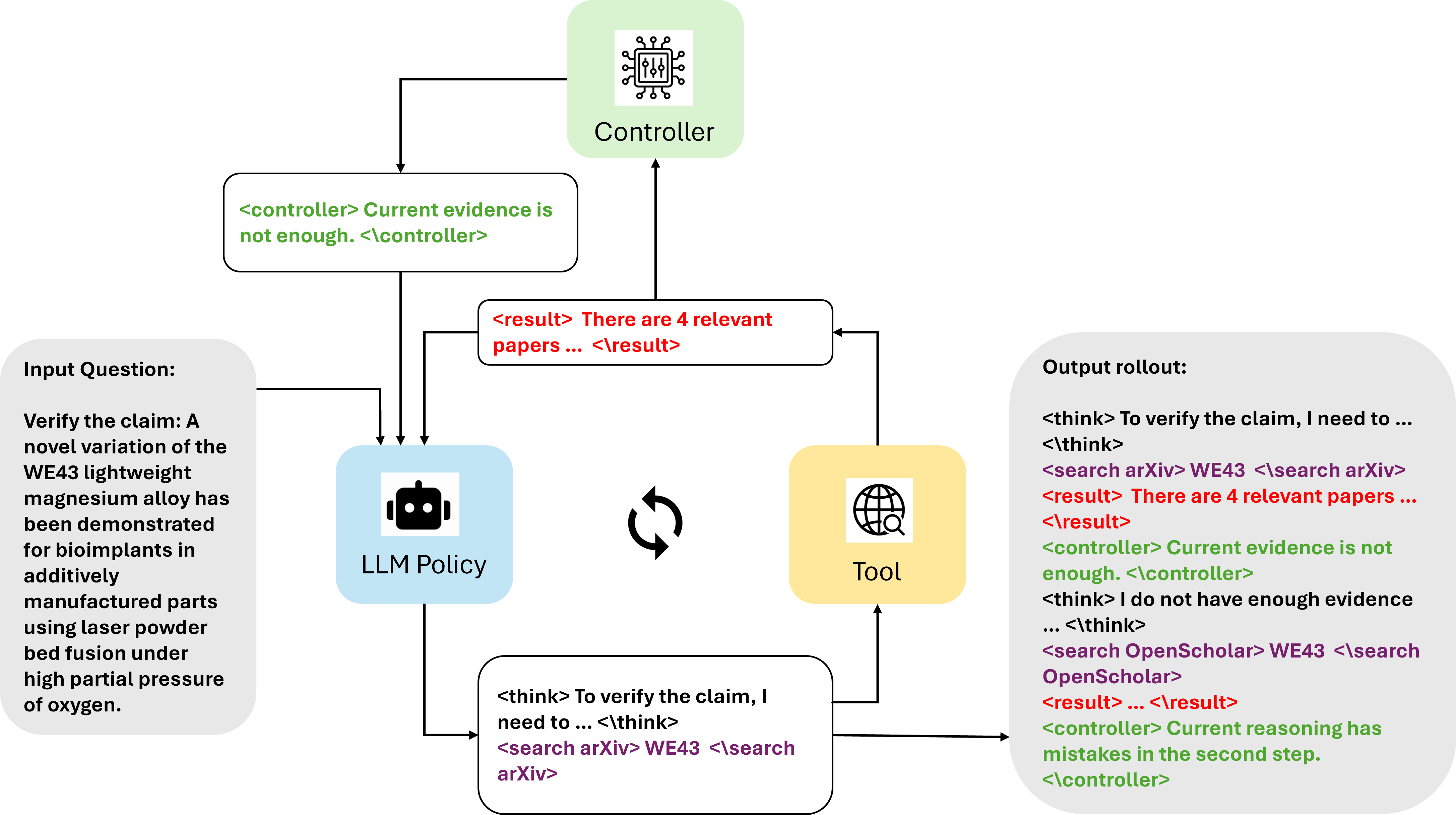

Scaling Search-Augmented Reasoning Agents via Adaptive Information Control

Siheng Xiong, Oguzhan Gungordu, Blair Johnson, James C. Kerce, Faramarz Fekri

DeepControl is an adaptive framework that optimizes information retrieval and expansion based on an agent’s reasoning state, enhancing performance across various benchmarks.

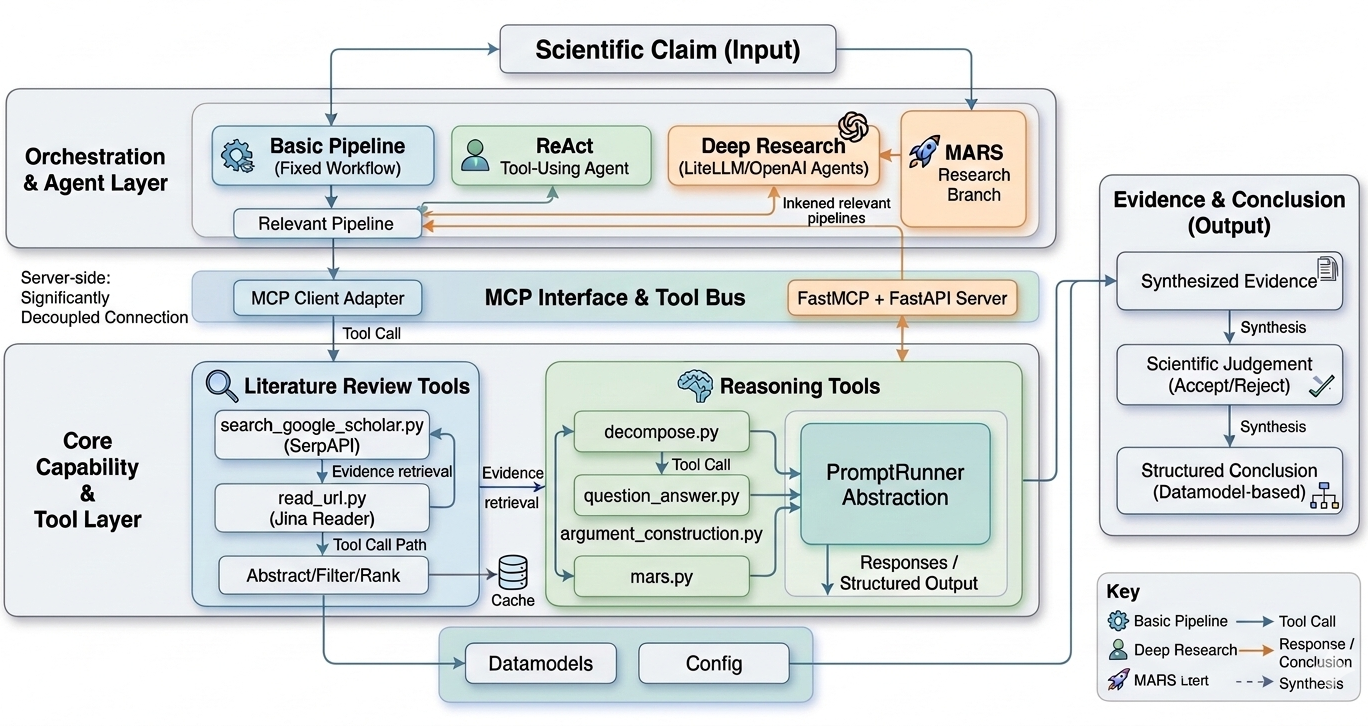

Evidence-Based Expert-Level Scientific Claim Verification

Siheng Xiong, Oguzhan Gungordu, Blair Johnson, Mika Okamoto, James C. Kerce, Faramarz Fekri

DeepVerify equips state-of-the-art language models with integrated search and reasoning capabilities to verify expert-level scientific claims using evidence.

Large Language Models for Reasoning and Planning

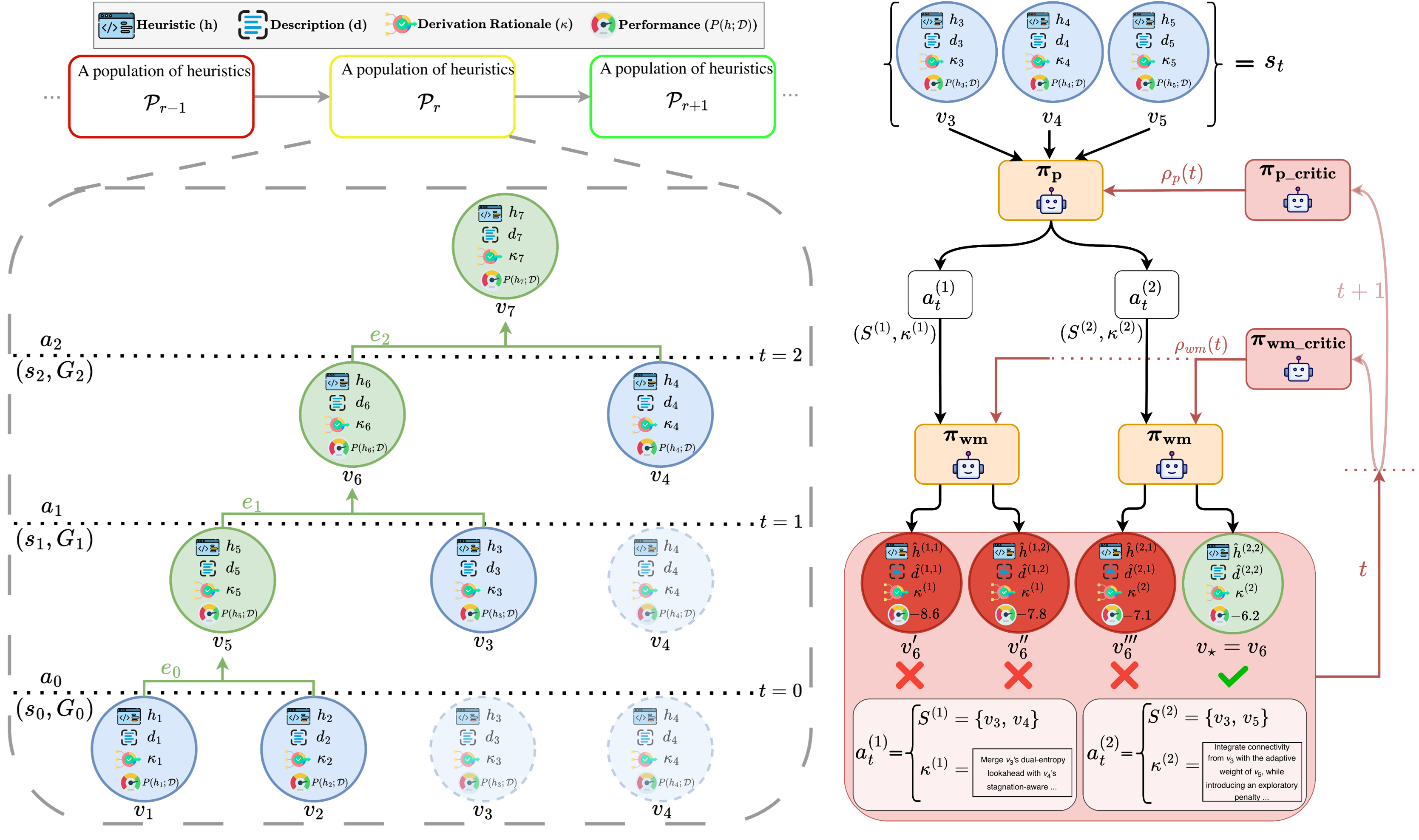

Planning through World Model for Automated Heuristic Design via Self-Evolving LLMs

Oguzhan Gungordu, Siheng Xiong, Faramarz Fekri

PathWise is a multi-agent framework that uses stateful memory and evolutionary actions to guide automated heuristic design, enabling structured reasoning, reuse of prior derivations, and controlled self-evolution of LLM-generated heuristics.

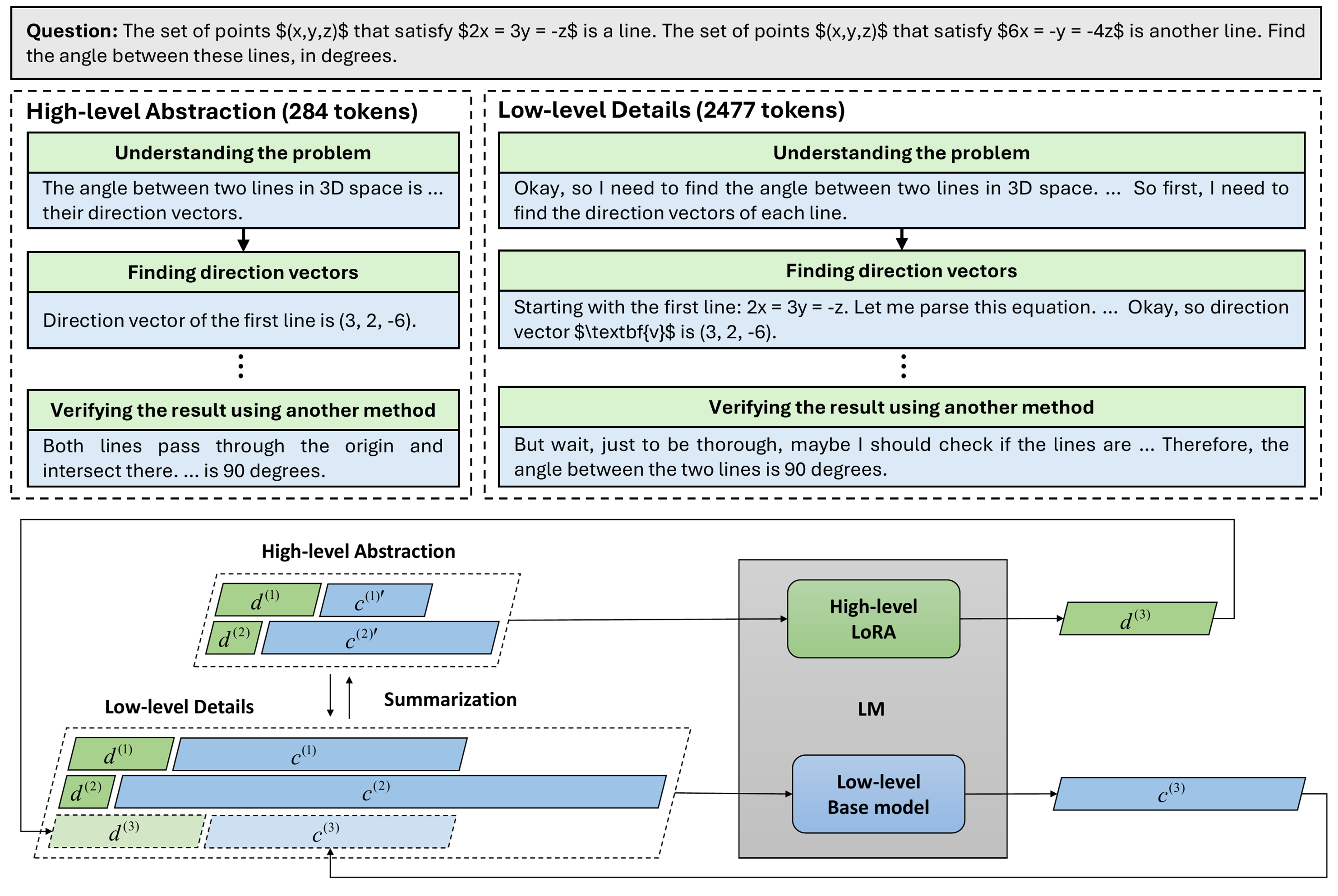

Enhancing Language Model Reasoning with Structured Multi-Level Modeling

Siheng Xiong, Ali Payani, Faramarz Fekri

Multi-Level Reasoning (MLR) is a lightweight planner-executor loop that improves long-horizon reasoning, using iterative Step-DPO for scalable supervision to enhance accuracy and stability.

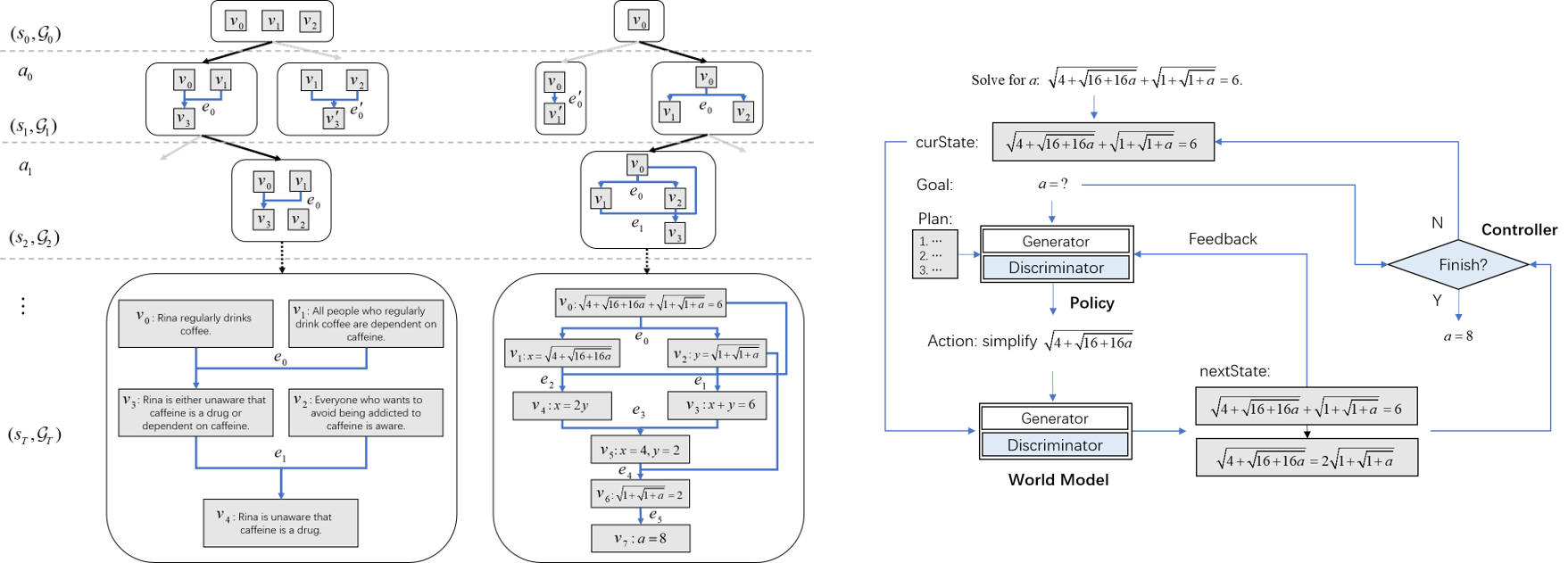

Deliberate Reasoning in Language Models as Structure-Aware Planning with an Accurate World Model

Siheng Xiong, Ali Payani, Yuan Yang, Faramarz Fekri

SWAP (Structure-Aware Planning) is a framework for multi-step reasoning with LMs, where the world model predicts the next state as a graph, guiding the policy model to propose the next action.

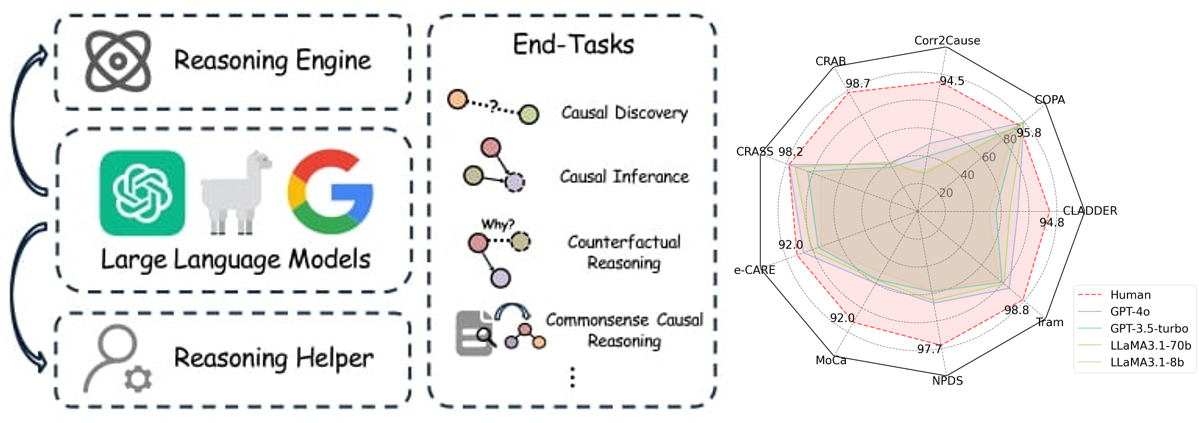

CausalEval: Towards Better Causal Reasoning in Language Models

Longxuan Yu*, Delin Chen*, Siheng Xiong*, Qingyang Wu, Dawei Li, Zhikai Chen, Xiaoze Liu, Liangming Pan

We provide a comprehensive review of research aimed at enhancing LMs for causal reasoning. We evaluate the performance of different LMs and methods on various causal reasoning tasks, providing key findings and in-depth analysis.

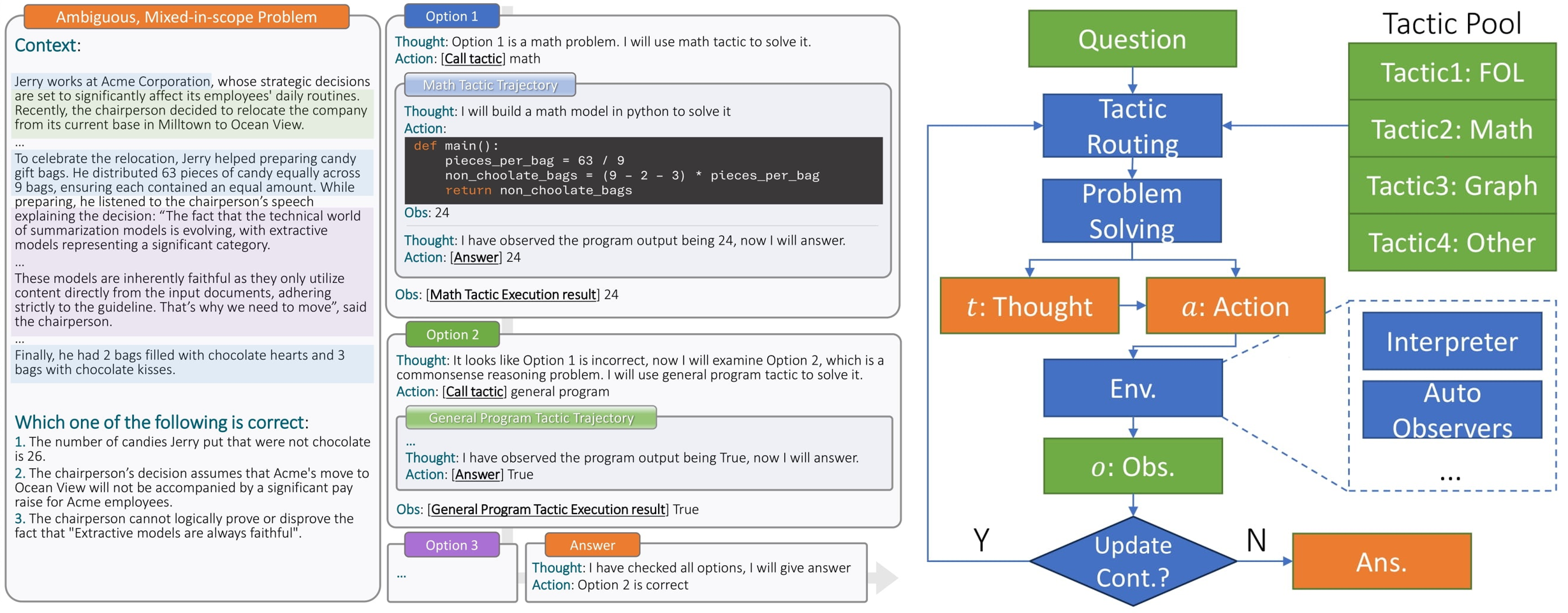

Can LLMs Reason in the Wild with Programs?

Yuan Yang, Siheng Xiong, Ali Payani, Ehsan Shareghi, Faramarz Fekri

Tiger is a TactIc-Guided ReasonER designed to tackle reasoning-in-the-wild tasks by generating and refining programs. It learns from previous trajectories to iteratively improve program generation, enabling more effective reasoning in a manner similar to OpenAI o1.

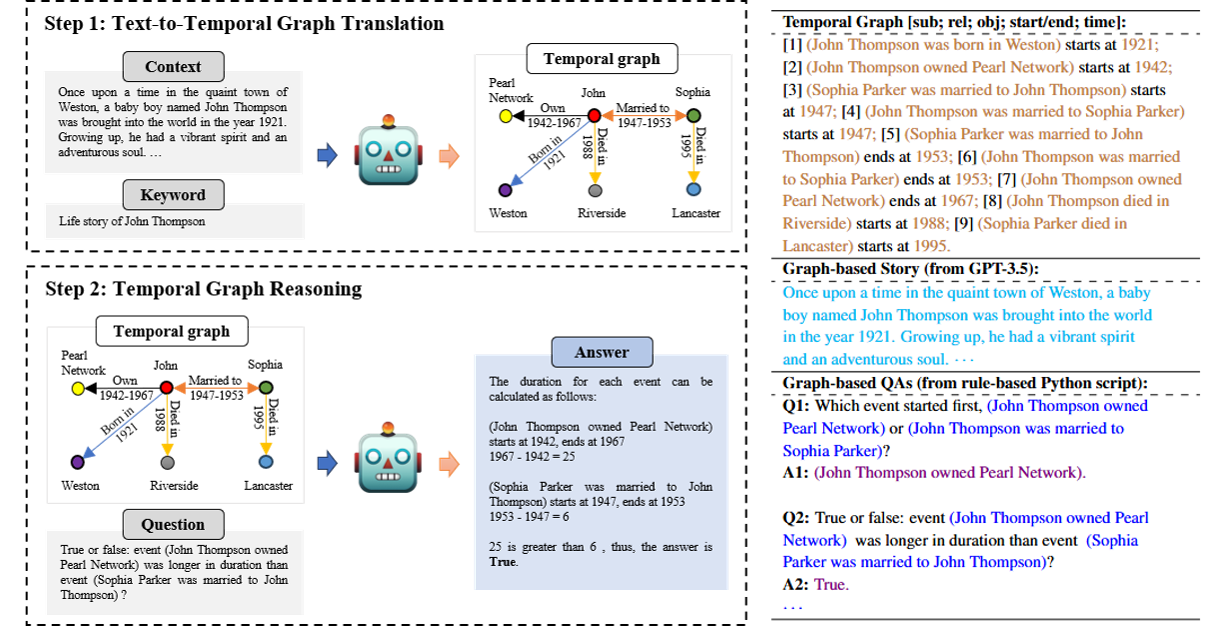

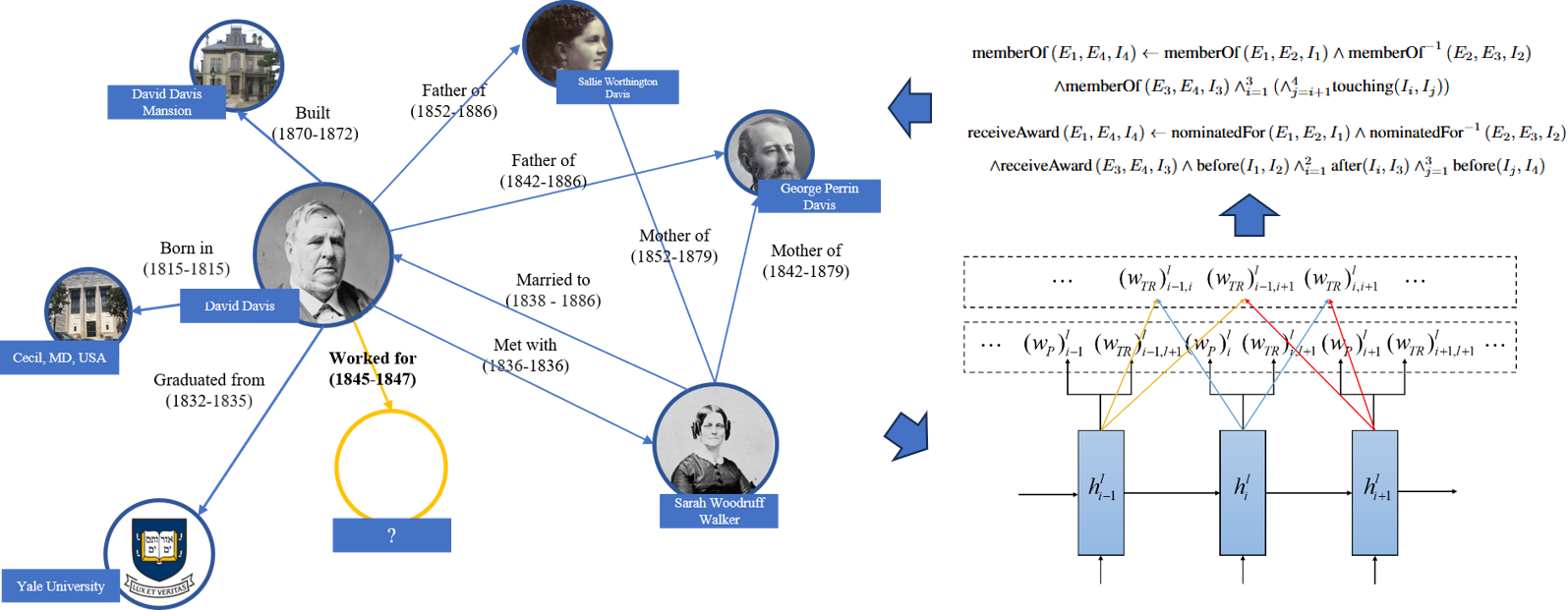

Large Language Models Can Learn Temporal Reasoning

Siheng Xiong, Ali Payani, Ramana Kompella, Faramarz Fekri

TG-LLM performs temporal reasoning in two steps: 1) Text-to-Temporal Graph translation: generate a temporal graph from the given context and keyword; 2) Temporal Graph Reasoning: perform deliberate CoT reasoning over the temporal graph.

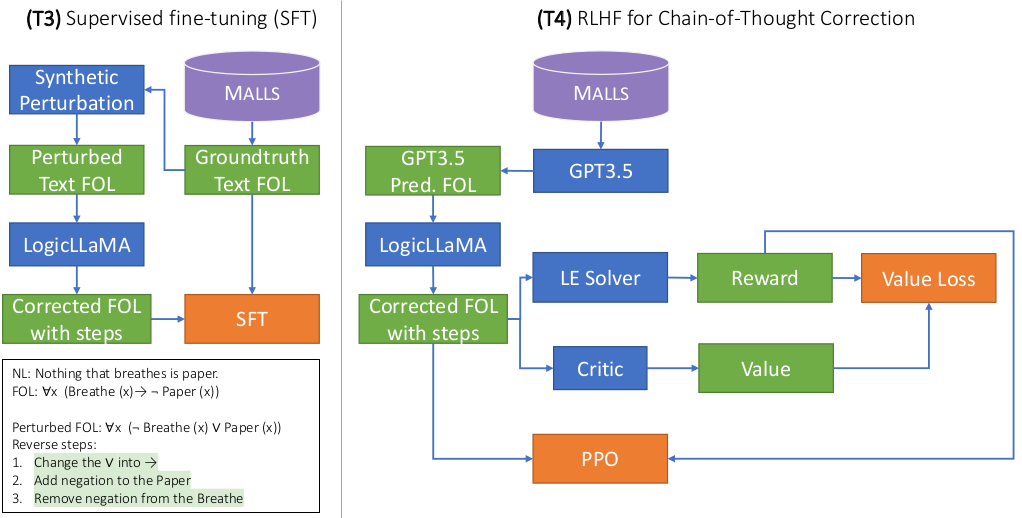

Harnessing the power of large language models for natural language to first-order logic translation

Yuan Yang, Siheng Xiong, Ali Payani, Ehsan Shareghi, Faramarz Fekri

LogicLLaMA can be used standalone for the NL-FOL translation task or to correct rules previously generated by other models.

Long Context Language Modelling

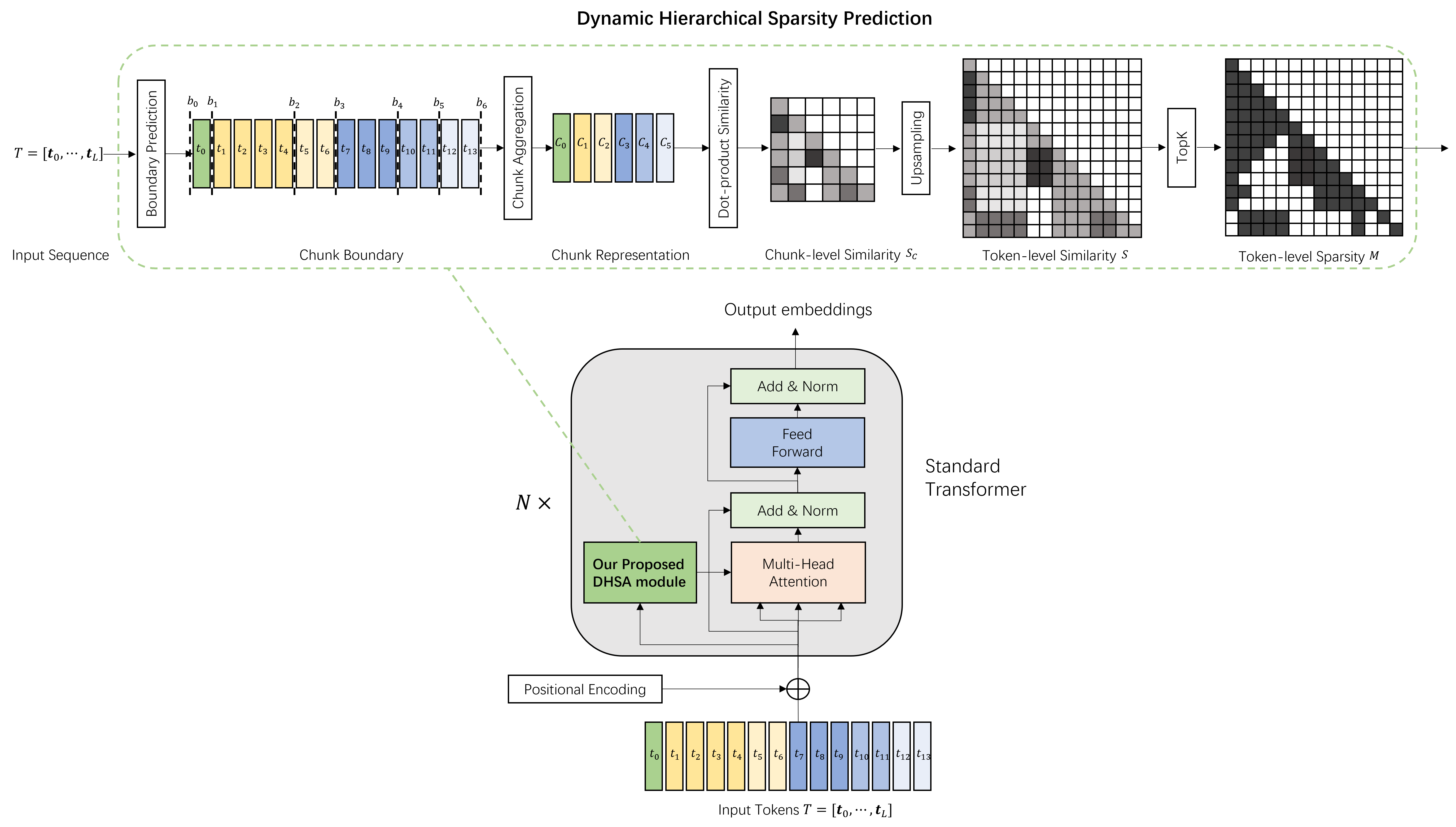

Long-Context Modeling with Dynamic Hierarchical Sparse Attention for On-Device LLMs

Siheng Xiong, Joe Zou, Faramarz Fekri, Yae Jee Cho

We introduce Dynamic Hierarchical Sparse Attention (DHSA), a plug-in module for Transformers that improves efficiency by predicting token-level sparsity using chunk-level similarity, reducing latency and memory usage while maintaining performance.

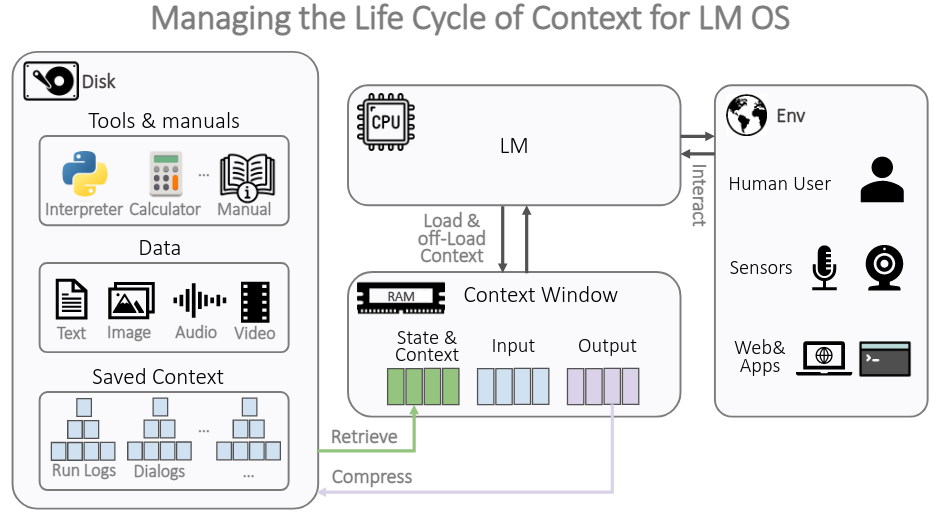

The Compressor-Retriever Architecture for Language Model OS

Yuan Yang, Siheng Xiong, Ehsan Shareghi, Faramarz Fekri

We introduce compressor-retriever, a model-agnostic architecture designed for life-long context management. Our approach exclusively uses the base model’s forward function to compress and retrieve context, ensuring end-to-end differentiability.

Temporal Knowledge Graph Reasoning

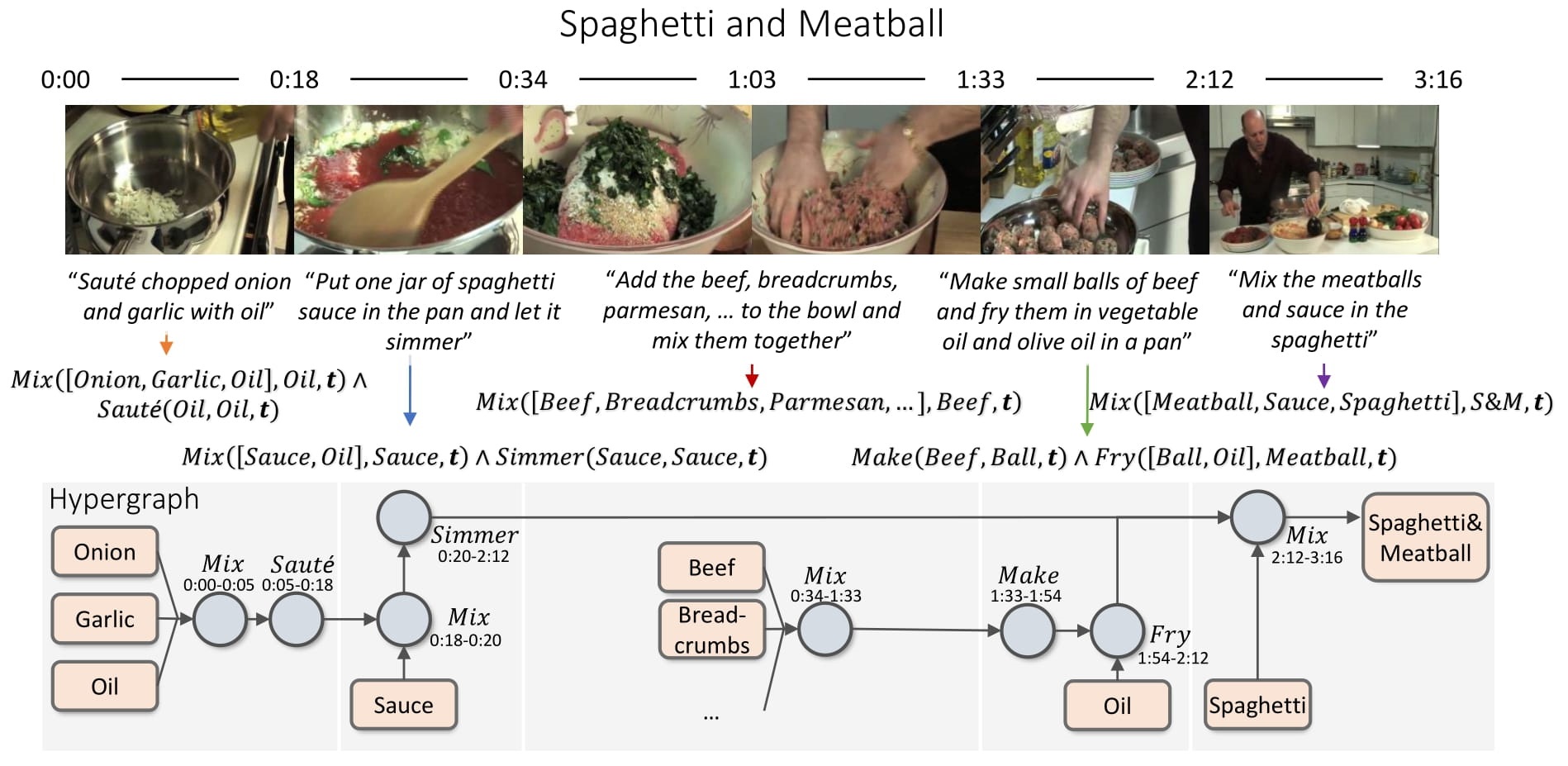

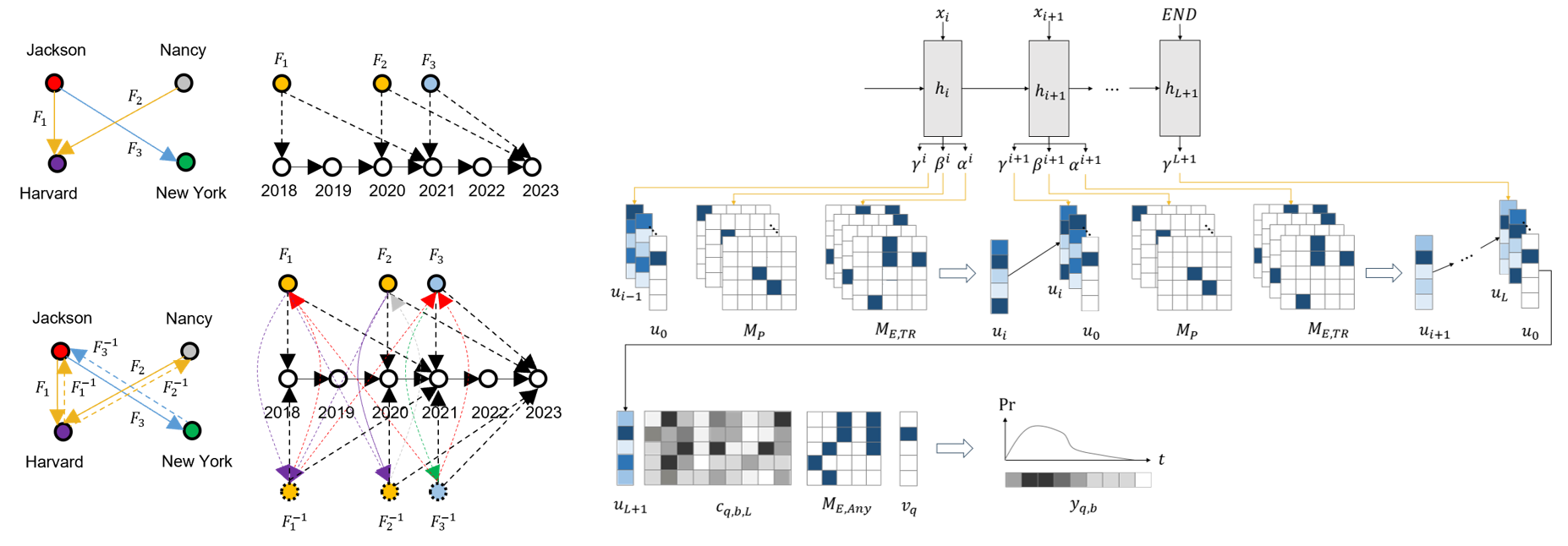

TILR: Temporal Inductive Logic Reasoning over Hypergraphs

Yuan Yang, Siheng Xiong, Ali Payani, James C Kerce, Faramarz Fekri

TILR is a reasoning framework that detects inductive patterns in temporal data via a neural-logic methodology. The framework aims to assist the training of modern ML models by inducing patterns for accurate grounding with less data.

TEILP: Time prediction over knowledge graphs via logical reasoning

Siheng Xiong, Yuan Yang, Ali Payani, James C Kerce, Faramarz Fekri

TEILP extends TILP by converting TKGs into temporal event knowledge graphs (TEKGs) and developing a differentiable random walk approach with conditional probability density functions for time prediction based on query intervals.

TILP: Differentiable learning of temporal logical rules on knowledge graphs

Siheng Xiong, Yuan Yang, Faramarz Fekri, James Clayton Kerce

TILP is the first differentiable framework for learning temporal logical rules, using a constrained random walk mechanism and temporal operators to model key temporal features.

📝 Selected Publications

📝 Preprints

- Preprint 2026: Scaling Search-Augmented Reasoning Agents via Adaptive Information Control. Siheng Xiong, Oguzhan Gungordu, Blair Johnson, James C. Kerce, Faramarz Fekri [Repo]

- Preprint 2026: Planning through World Model for Automated Heuristic Design via Self-Evolving LLMs. Oguzhan Gungordu, Siheng Xiong, Faramarz Fekri [Repo]

- Preprint 2025: Enhancing Long Chain-of-Thought Reasoning with Multi-Path Planning with Aggregation. Siheng Xiong, Ali Payani, Faramarz Fekri [Repo]

- Preprint 2025: Long-Context Modeling with Dynamic Hierarchical Sparse Attention for Memory-Constrained LLM Inference. Siheng Xiong, Joe Zou, Faramarz Fekri, Yae Jee Cho [Repo]

- Preprint 2024: The Compressor-Retriever Architecture for Language Model OS. Yuan Yang, Siheng Xiong, Ehsan Shareghi, Faramarz Fekri [Repo]

📝 Published

- ICLR 2026 @ SPOT: Scaling Search-Augmented Reasoning Agents via Adaptive Information Control. Siheng Xiong, Oguzhan Gungordu, Blair Johnson, James C. Kerce, Faramarz Fekri [Repo]

- ICLR 2026: Enhancing Language Model Reasoning with Structured Multi-Level Modeling. Siheng Xiong, Ali Payani, Faramarz Fekri [Repo]

- NeurIPS 2025 @ ER: Long-Context Modeling with Dynamic Hierarchical Sparse Attention for On-Device LLMs. Siheng Xiong, Joe Zou, Faramarz Fekri, Yae Jee Cho [Repo]

- ACS 2025: Deliberate Planning in Language Models with Symbolic Representation. Siheng Xiong, Zhangding Liu, Jieyu Zhou, Yusen Su [Repo]

- ACL 2025 (main): Deliberate Reasoning in Language Models as Structure-Aware Planning with an Accurate World Model. Siheng Xiong, Ali Payani, Yuan Yang, Faramarz Fekri [Repo]

- NAACL 2025 (main): CausalEval: Towards Better Causal Reasoning in Language Models. Longxuan Yu*, Delin Chen*, Siheng Xiong*, Qingyang Wu, Qingzhen Liu, Dawei Li, Zhikai Chen, Xiaoze Liu, Liangming Pan [Repo]

- EMNLP 2024: Can LLMs Reason in the Wild with Programs? Yuan Yang, Siheng Xiong, Ali Payani, Ehsan Shareghi, Faramarz Fekri [Repo]

- IJCAI 2024: Temporal Inductive Logic Reasoning over Hypergraphs. Yuan Yang, Siheng Xiong, Ali Payani, James C Kerce, Faramarz Fekri [Repo]

- ACL 2024 (main): Large Language Models Can Learn Temporal Reasoning. Siheng Xiong, Ali Payani, Ramana Kompella, Faramarz Fekri [Repo]

- ACL 2024 (main): Harnessing the power of large language models for natural language to first-order logic translation. Yuan Yang, Siheng Xiong, Ali Payani, Ehsan Shareghi, Faramarz Fekri [Repo]

- AAAI 2024 (Oral): TEILP: Time prediction over knowledge graphs via logical reasoning. Siheng Xiong, Yuan Yang, Ali Payani, James C Kerce, Faramarz Fekri [Repo]

- ICLR 2023: TILP: Differentiable learning of temporal logical rules on knowledge graphs. Siheng Xiong, Yuan Yang, Faramarz Fekri, James Clayton Kerce [Repo]

🎖 Honors and Awards

- China National Scholarship (Top 1% Rank)

- China UHV Scholarship (Top 1% Rank)

- Kaggle Santander Value Prediction Challenge, Silver Medal (Top 3.4% Rank)

📖 Education

- Georgia Institute of Technology, Ph.D. in Machine Learning

- Shanghai Jiao Tong University, M.S. in Electrical and Computer Engineering

- Xi’an Jiaotong University, B.S. in Electrical and Computer Engineering

💻 Experience

- 2025.05 - 2025.08, Applied Research Intern, Google, Sunnyvale, California

- 2023.09 - 2024.04, Research Intern, Cisco Research, San Jose, California

- 2020.05 - 2021.01, Research Student Assistant, Rutgers University (Mentor: Dimitris N. Metaxas), New Brunswick, New Jersey

📄 Services

- Program Committee for AAAI

- Conference Reviewer for NeurIPS, ICML, ICLR, ACL, EMNLP, NAACL, EACL, KDD, AAAI

- Journal Reviewer for ACM TIST